Author: hciadmin

Catchers who analyzed the NOX datasets

This is a list of online volunteers (aka “Catchers”) who played Stall Catchers to analyze Alzheimer’s research data for the Schaffer-Nishimura Laboratory in the Biomedical Engineering Department, Cornell University. The […]

Crowd2Map Tanzania

Crowd2Map Tanzania is a crowdsourced initiative aimed at creating a comprehensive map of rural Tanzania, including detailed depictions of all of its villages, roads and public resources (such as schools, […]

EyesOnALZ on PBS in Episode 1 of The Crowd and The Cloud

EyesOnALZ, a project led by the Human Computation Institute, will be among four projects featured in the premiere episode of The Crowd and The Cloud, a National Public Television mini-series […]

EyesOnALZ project launches the first citizen science game to fight Alzheimer’s

Funded by a grant from the BrightFocus Foundation, HCI has been collaborating with Cornell, Berkeley, Princeton, WiredDifferently, and SciStarter to develop a platform for crowdsourcing the AD research being done […]

Workshop report calls for National Initiative

The Computing Community Consortium has just announced that the workshop report from the Human Computation Roadmap Summit has been published and is available for download as a PDF file here. This report describes […]

Crowdsourcing Alzheimer’s Research

Human Computation Institute is leading an initiative to develop an online Citizen Science platform that will enable the general public to contribute directly to Alzheimer’s Disease research and possibly lead to a […]

Computational Complexity and Popsicles

“Wicked problems”[1][2], such as climate change, poverty, and geopolitical instability, tend to be ill-defined, multifaceted, and complex such that solving one aspect of the problem may create new, worse problems. […]

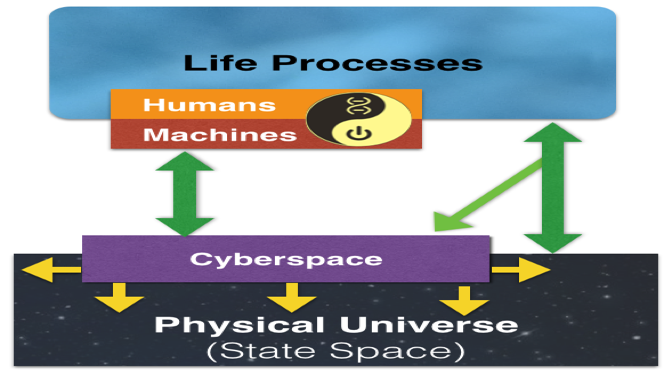

Life, the Universe, and Information Processing

Abstract (the full paper is available here as a PDF): Humans are the most effective integrators and producers of information, directly and through the use of information-processing inventions. As these […]

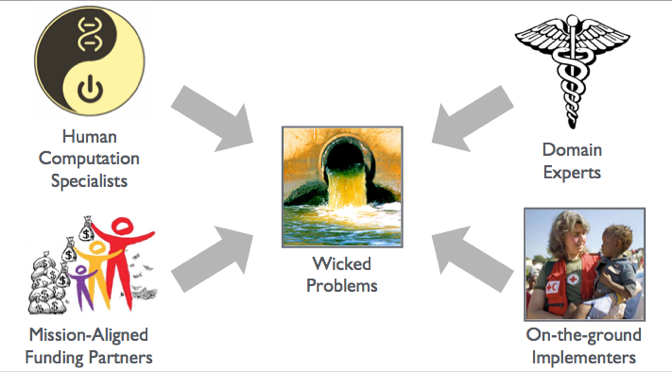

Solving wicked problems is a four-way partnership

What is a wicked problem? “Wicked problems” are intractable societal problems (e.g., climate change, pandemic disease, geopolitical conflict), the solutions of which exceed the reach of individual human cognitive abilities[1]. […]